Delay analysis

A delay is a deliberate pause introduced in the execution of a program.

Delays are commonly used in embedded systems programming, for example when working with microcontrollers. The purpose of a delay function is to pause program execution for a specified amount of time.

You can use a combination of loops and system-specific functions to implement a delay function in C.

Example:

Let’s use a for loop to implement the delay.

for(uint32_t i=0; i< 300000; i++);

Here I created a variable i initialized to 0, incremented it with i++, and used a count of 300000, which may look arbitrary at this point. Later we can work out how much delay it introduces with some analysis.
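One caveat with an empty loop like this: an optimizing compiler is allowed to remove it entirely, because it has no observable effect. A minimal sketch of one common workaround is to make the counter `volatile`. The helper name `delay_loop` is hypothetical and not part of the original code.

```c
#include <stdint.h>

/* Hypothetical helper (not from the original project): busy-waits for
 * `count` iterations of an empty loop. */
void delay_loop(uint32_t count)
{
    /* `volatile` forces the compiler to perform every read and write of i,
     * so the loop is not optimized away. */
    for (volatile uint32_t i = 0; i < count; i++) {
        /* intentionally empty */
    }
}
```

Calling `delay_loop(300000)` would then play the role of the loop above. Note that a volatile counter may change the number of instructions per iteration, so the timing would need to be checked again.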

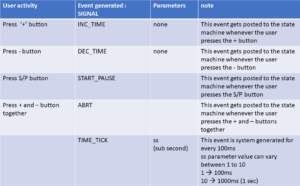

Look at the SWV ITM Data console in Figure 1. When I press key 1, it prints 1. When I press the asterisk (*), it prints that as well. However, I pressed it only once and it was printed twice, so the delay may need some tuning. When I press a few more buttons, the output is printed correctly. That’s great.

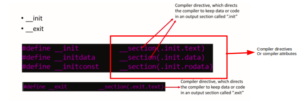

Let’s do some instruction-level analysis here. The assembly-level implementation of this for loop is shown on the right-hand side.

Here you can see that one iteration of the for loop executes 7 instructions.

Now we can approximately calculate the delay introduced.

The processor is clocked by the 16 MHz internal RC oscillator, so it executes instructions at a clock frequency of 16 MHz. That is the speed of the processor on our STM32 board.

Let’s assume one instruction takes 1 clock cycle.

Under that assumption, 1 instruction takes 1 / 16 MHz = 0.0625 microseconds. Keep in mind that this is only an approximation.

We know that one iteration of the for loop takes 7 instructions. 7 instructions take 7 x 0.0625 = 0.4375 microseconds, which we round to about 0.5 microseconds.

That means one iteration of the for loop takes roughly 0.5 microseconds.
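The per-iteration arithmetic can be sketched in C as follows. The function name `iteration_time_us` and its parameters are hypothetical, and the code bakes in the same assumption as the text: every instruction takes exactly one clock cycle.

```c
#include <stdint.h>

/* Hypothetical helper: estimated duration of one loop iteration in
 * microseconds, ASSUMING one clock cycle per instruction. */
double iteration_time_us(uint32_t clock_hz, uint32_t instr_per_iter)
{
    /* instructions * (1e6 us per second) / (cycles per second) */
    return (double)instr_per_iter * 1e6 / (double)clock_hz;
}
```

With the values used here, `iteration_time_us(16000000u, 7u)` evaluates to 0.4375, which is rounded up to 0.5 microseconds for the rest of the calculation.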

How about 1000 microseconds?

1000 microseconds is 1 ms (1 millisecond), which works out to 1000 / 0.5 = 2000 iterations. So, 2000 iterations of the for loop generate about 1 millisecond of delay. How many iterations do we need for 150 milliseconds? 150 x 2000 = 300000.

That’s why I used this count in the for loop. That means this for loop will cause approximately 150 milliseconds of delay.
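As a rough cross-check, the loop count can also be derived directly from the clock frequency. This is only a sketch under the same one-cycle-per-instruction assumption; the function `loop_count_for_ms` and its parameter names are hypothetical.

```c
#include <stdint.h>

/* Hypothetical helper: number of loop iterations needed for a delay of
 * `delay_ms` milliseconds, ASSUMING each iteration costs `cycles_per_iter`
 * clock cycles. `cycles_per_iter` must be nonzero. */
uint32_t loop_count_for_ms(uint32_t delay_ms, uint32_t clock_hz,
                           uint32_t cycles_per_iter)
{
    /* 64-bit intermediate avoids overflow of clock_hz * delay_ms */
    uint64_t total_cycles = (uint64_t)clock_hz * delay_ms / 1000u;
    return (uint32_t)(total_cycles / cycles_per_iter);
}
```

`loop_count_for_ms(150, 16000000u, 8)` gives 300000, matching the count above, because rounding the iteration time up to 0.5 microseconds is the same as assuming 8 cycles per iteration. With exactly 7 cycles the result is 342857, so under that assumption 300000 iterations would take somewhat less than 150 ms. Measuring on the hardware is the reliable way to confirm the actual delay.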
