Active Objects

This article delves into the concept of Active Objects and how they can be effectively applied in software design. We’ll discuss the limitations of traditional designs and then introduce the Active Object paradigm as a solution.

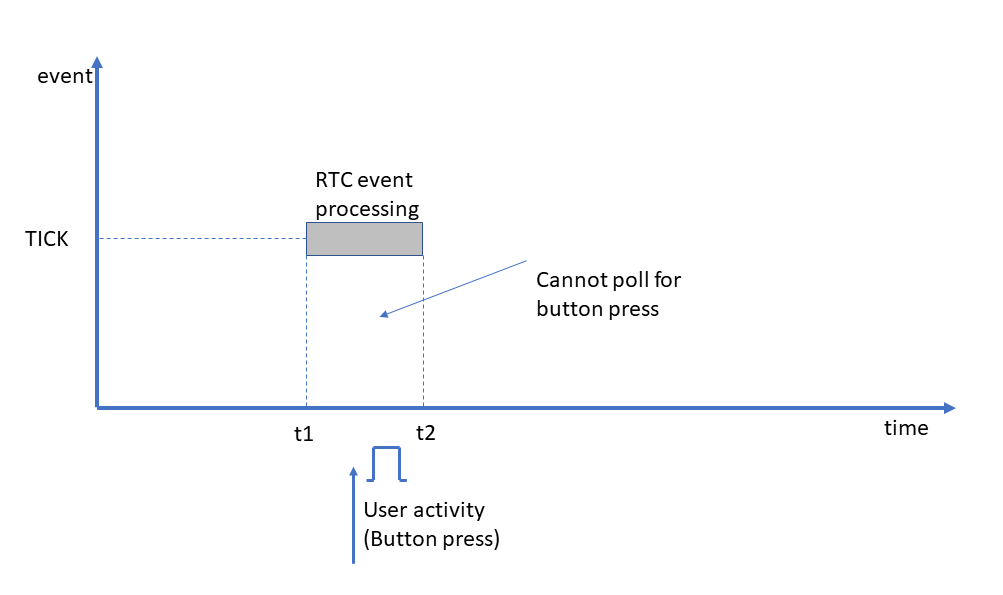

In our application, we poll for button presses: code in the loop reads all the pins connected to the buttons. This design approach can lead to problems. Consider what happens while the application is processing an event.

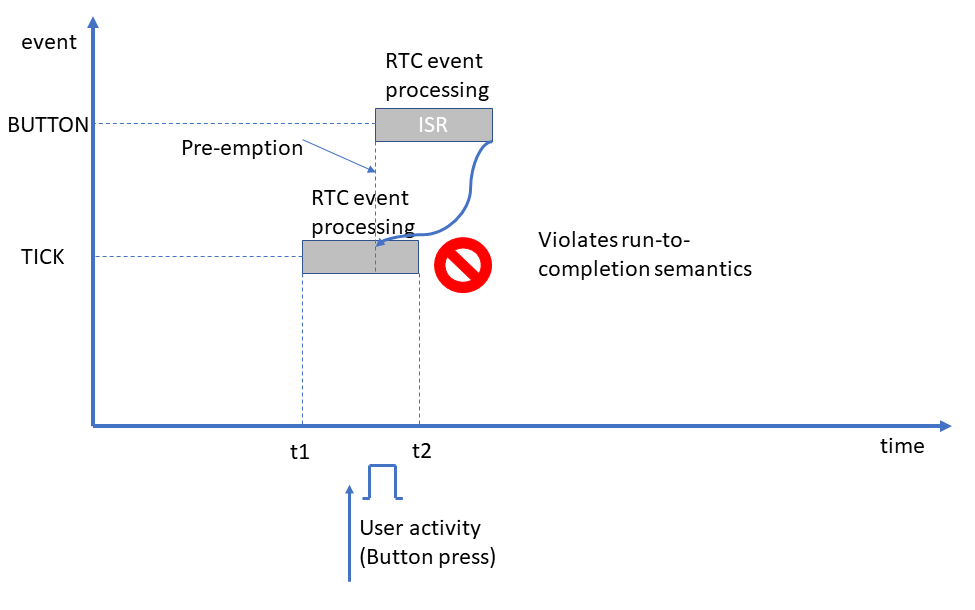

For example, let’s say the application is busy processing an event called TICK. While it is processing, it is not polling the buttons. If any user activity, such as a button press, happens between t1 and t2, the application misses that event.

How do you solve this problem?

Solution: Button Interrupts:

Instead of polling for the button press, you implement a button interrupt. Let’s say the application is busy processing the TICK event. If a button interrupt occurs, the microcontroller preempts the application and starts executing the ISR.

Inside the interrupt service routine, you could process the button event right away. But this is wrong, because it violates run-to-completion (RTC) semantics: once you are in the middle of an RTC step, you must complete it before starting the RTC step for the next event. So we cannot use this approach either.

This means the ISR should not start processing the RTC step for its event. Instead, the ISR should queue the event and exit.

When the application completes the current RTC step, it can get the next event from the queue and process the next RTC step. That’s why we have to introduce an event queue, which is a very important part of the application. We didn’t implement one in the previous exercise.

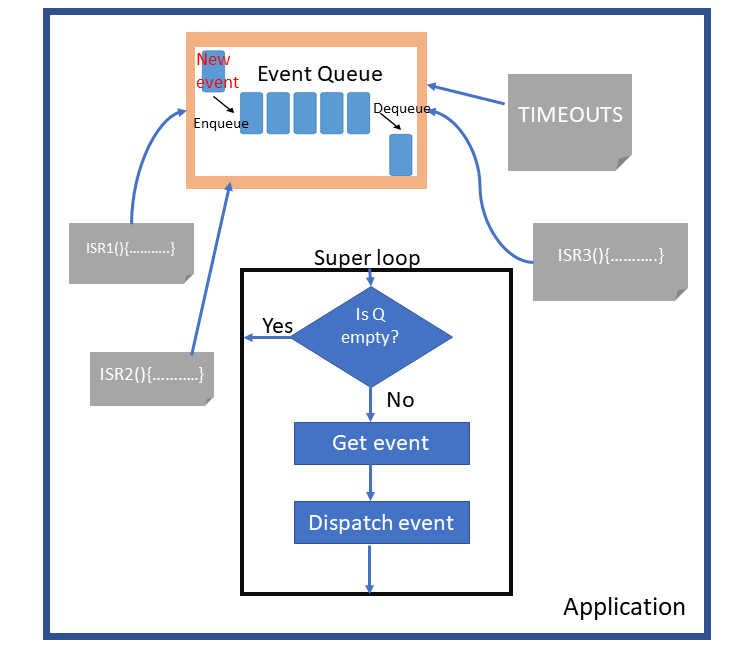

Now your application has an event queue. A queue is a first-in, first-out (FIFO) data structure: you enqueue new events at one end and dequeue the oldest events from the other.
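A FIFO event queue like the one described above can be sketched as a small ring buffer. This is only an illustration: the names `Event`, `EventQueue`, `EventQueue_post`, `EventQueue_get`, and the signal values are assumptions made for this example, not part of the QP framework.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical signals; a real application defines its own. */
typedef enum { TICK_SIG = 1, BUTTON_SIG } Signal;

typedef struct {
    Signal sig;
} Event;

#define QUEUE_LEN 4U

typedef struct {
    Event   buf[QUEUE_LEN];
    uint8_t head;  /* slot where the next event is written */
    uint8_t tail;  /* slot holding the oldest event */
    uint8_t used;  /* number of events currently stored */
} EventQueue;

void EventQueue_init(EventQueue *q) {
    assert(q != NULL);
    q->head = 0U;
    q->tail = 0U;
    q->used = 0U;
}

/* Enqueue a new event. Returns false when the queue is full so the
 * caller can detect the overflow. On a real microcontroller, the body
 * would need a critical section (interrupts disabled) if it is called
 * from both ISRs and the main loop. */
bool EventQueue_post(EventQueue *q, Event e) {
    assert(q != NULL);
    if (q->used == QUEUE_LEN) {
        return false;
    }
    q->buf[q->head] = e;
    q->head = (uint8_t)((q->head + 1U) % QUEUE_LEN);
    ++q->used;
    return true;
}

/* Dequeue the oldest event. Returns false when the queue is empty. */
bool EventQueue_get(EventQueue *q, Event *out) {
    assert(q != NULL && out != NULL);
    if (q->used == 0U) {
        return false;
    }
    *out = q->buf[q->tail];
    q->tail = (uint8_t)((q->tail + 1U) % QUEUE_LEN);
    --q->used;
    return true;
}
```

Events come out in the order they were posted, and a full queue is reported to the producer instead of silently overwriting older events.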

ISR1, ISR2, ISR3, and TIMEOUTS are the event-producing points of your application. All of them post events to the event queue. Your application may have one super loop, like the loop function in our Arduino application, which checks whether the queue is empty. If the queue is not empty, the loop extracts an event (Get event) and dispatches it to the state machine (Dispatch event).

The state machine completes the associated RTC step and returns, and the loop continues in the same way.
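The flow described above (ISRs only post, the super loop gets and dispatches) might look roughly like the following sketch. All names here (`post_event`, `get_event`, `dispatch`, `button_isr`, `tick_isr`, `loop_once`) are hypothetical, the "state machine" is reduced to two counters, and interrupt locking is left out for brevity.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

enum { TICK_SIG = 1, BUTTON_SIG };  /* hypothetical signals */

typedef struct {
    uint8_t sig;
} Event;

/* Minimal fixed-size FIFO shared by the producers and the loop. */
#define QUEUE_LEN 8U
static Event   queue[QUEUE_LEN];
static uint8_t head, tail, used;

static bool post_event(uint8_t sig) {
    if (used == QUEUE_LEN) {
        return false;  /* queue full: the event is dropped */
    }
    queue[head].sig = sig;
    head = (uint8_t)((head + 1U) % QUEUE_LEN);
    ++used;
    return true;
}

static bool get_event(Event *out) {
    if (used == 0U) {
        return false;
    }
    *out = queue[tail];
    tail = (uint8_t)((tail + 1U) % QUEUE_LEN);
    --used;
    return true;
}

/* Stand-in for the state machine: it only counts what it handled. */
unsigned ticks_handled;
unsigned presses_handled;

static void dispatch(Event const *e) {
    assert(e != NULL);
    switch (e->sig) {
    case TICK_SIG:   ++ticks_handled;   break;
    case BUTTON_SIG: ++presses_handled; break;
    default:         break;
    }
}

/* ISR bodies: they queue the event and exit, nothing more. */
void button_isr(void) { (void)post_event(BUTTON_SIG); }
void tick_isr(void)   { (void)post_event(TICK_SIG); }

/* One pass of the super loop: get one event and run one complete
 * RTC step. Returns false when the queue was empty. */
bool loop_once(void) {
    Event e;
    if (!get_event(&e)) {
        return false;
    }
    dispatch(&e);
    return true;
}
```

Because an ISR never calls `dispatch` itself, an RTC step that is already running is never interrupted by another RTC step; the new event simply waits in the queue.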

Introducing an event queue solves the problem of missed events: producers can keep posting new events asynchronously, and as long as the queue has room, none of them is lost.

What if your application has many state machines?

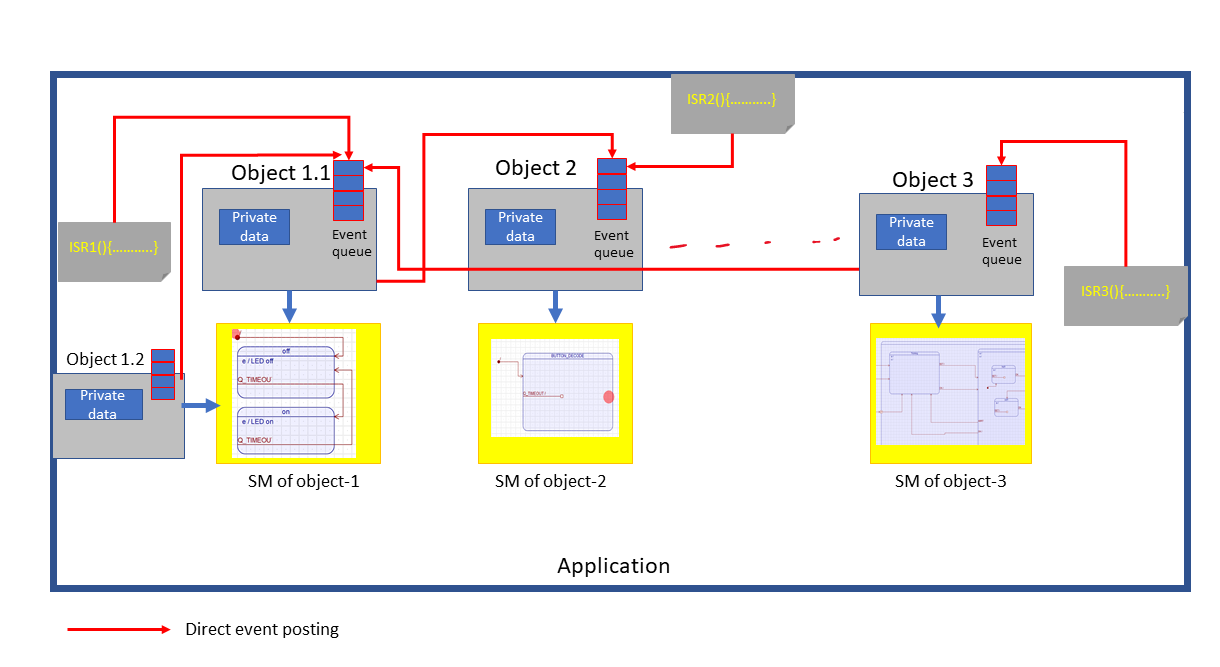

That is also possible. Consider an application (Figure 4) made up of many classes, each implementing some of the application’s requirements. With many classes, there can be many objects. And you already know that a state machine can be used to model the behavior of a reactive object.

That means there could be many state machines in the application. In that case, using one event queue for all of them may not be a good idea. Instead, each object can host its own event queue, its own state machine, which models that object’s behavior, and its own private data.

The application can also have many event-producing points, such as ISRs, and these can post events directly to the event queue of an object. An object can itself be an event-producing point and post an event to another object through that object’s event queue.

Beyond that, each object can have its own thread of execution. You can launch the state machine of object 1 in one RTOS thread and the state machine of object 2 in another. When each object has its own thread of control, the design approach is called the Active Object approach.

Here, each object has its own behavior, which is nothing but a state machine. The object’s behavior depends entirely on the present state of its own state machine (SM of object-1), which is not influenced by any other object.

Another object cannot see this state machine, so it cannot influence it directly. If one object wants to share data with another, it can post an event to that object, or it can use the message-passing facilities of the underlying RTOS, such as message queues, to share the information in a thread-safe manner.
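The structure described above can be sketched in plain C as follows. This is not the QP framework’s implementation: `Active`, `Active_post`, `Active_run_once`, and the two handlers are hypothetical names, and the objects are stepped by hand in a single thread, whereas under an RTOS each object would run its own loop in its own thread.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

enum { BUTTON_SIG = 1, SET_SIG };  /* hypothetical signals */

typedef struct {
    uint8_t sig;
} Event;

#define QUEUE_LEN 4U

typedef struct Active Active;
typedef void (*Handler)(Active *me, Event const *e);

struct Active {
    Event    queue[QUEUE_LEN];  /* private event queue */
    uint8_t  head, tail, used;
    Handler  handler;           /* the object's behavior */
    Active  *peer;              /* object this one posts to, or NULL */
    unsigned state;             /* private data */
};

void Active_init(Active *me, Handler handler, Active *peer) {
    assert(me != NULL && handler != NULL);
    me->head = 0U;
    me->tail = 0U;
    me->used = 0U;
    me->handler = handler;
    me->peer = peer;
    me->state = 0U;
}

/* Direct posting: the producer needs a pointer to the target object. */
bool Active_post(Active *me, uint8_t sig) {
    assert(me != NULL);
    if (me->used == QUEUE_LEN) {
        return false;
    }
    me->queue[me->head].sig = sig;
    me->head = (uint8_t)((me->head + 1U) % QUEUE_LEN);
    ++me->used;
    return true;
}

/* Take one event from the object's own queue and run one RTC step. */
bool Active_run_once(Active *me) {
    Event e;
    assert(me != NULL);
    if (me->used == 0U) {
        return false;
    }
    e = me->queue[me->tail];
    me->tail = (uint8_t)((me->tail + 1U) % QUEUE_LEN);
    --me->used;
    me->handler(me, &e);
    return true;
}

/* The button object reacts to a press by posting an event to its peer.
 * It never touches the peer's private data. */
void Button_handler(Active *me, Event const *e) {
    if (e->sig == BUTTON_SIG && me->peer != NULL) {
        (void)Active_post(me->peer, SET_SIG);
    }
}

/* The clock object changes its own private data in its own RTC step. */
void Clock_handler(Active *me, Event const *e) {
    if (e->sig == SET_SIG) {
        ++me->state;
    }
}
```

The only way the button object affects the clock object is by posting an event; the clock’s private data changes only when the clock itself processes that event.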

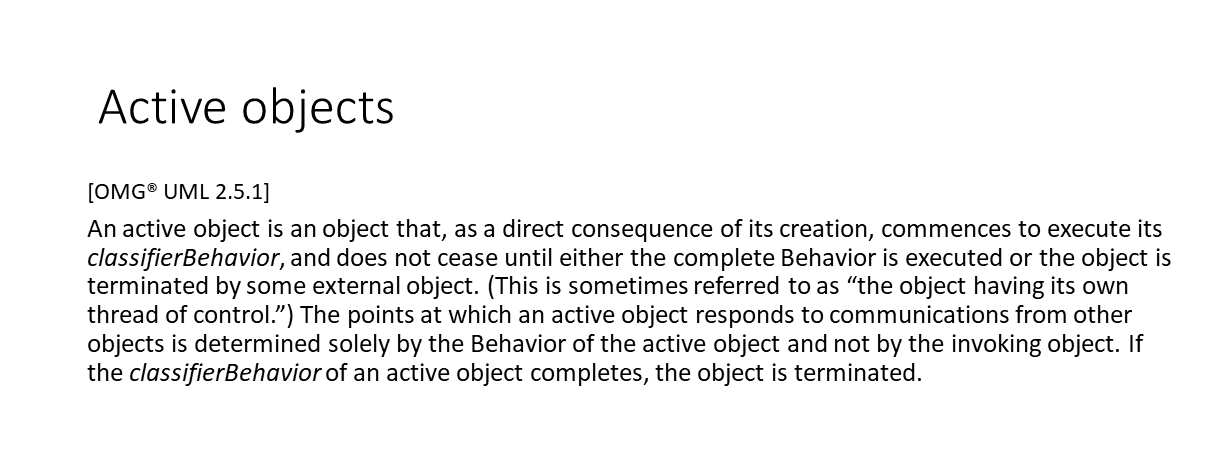

The Active Object specification defines an active object as “the object having its own thread of control.” The points at which an active object responds to communications from other objects are determined solely by the behavior of the active object and not by the invoking object.

The QP framework that we have been using in this section also supports the active object design paradigm.

In your application, you can create a class and convert it into an active object by deriving it from the framework’s Active object class. We will see this while doing the exercises.

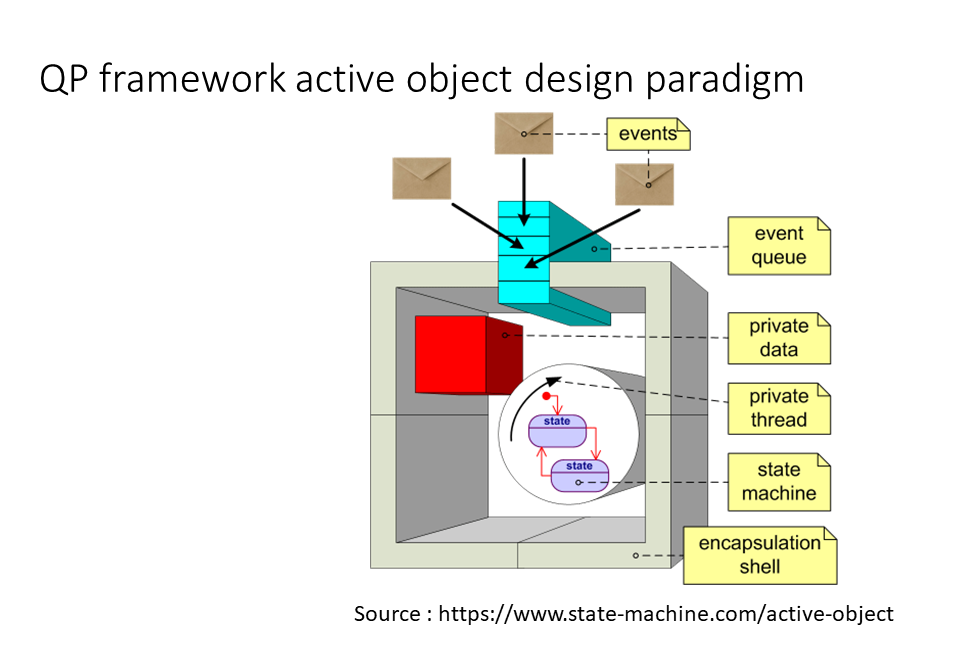

Events, event queue, private data, private thread, state machine, encapsulation shell are all the components or features of the Active object of the QP framework.

Each active object has its own event queue and its own state machine. It can also have its own private thread, and the framework supports various underlying kernels: a cooperative kernel, a preemptive kernel, or a third-party kernel such as FreeRTOS. The framework also gives you APIs to post events from ISRs to an active object’s event queue and from one active object to another. We will see all of these while coding the exercise.

Event Posting:

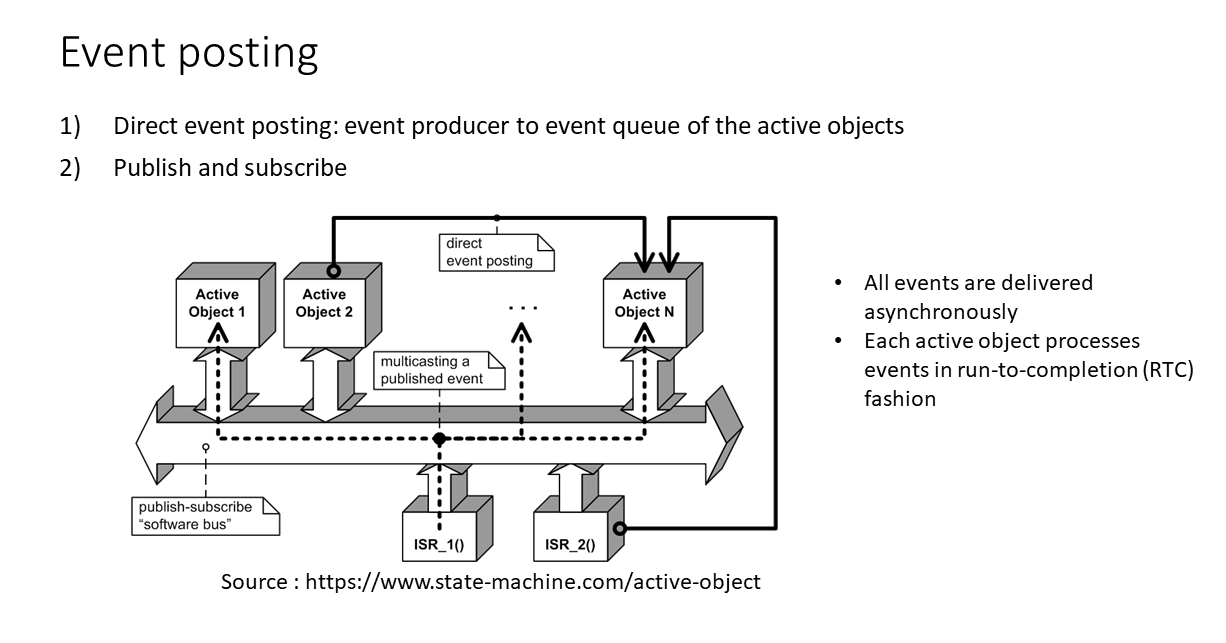

Now, let’s see how to do event posting in this framework. There are two methods. The first is direct event posting: the event producer posts events directly into the event queue of an active object.

For example, ISR_1 can post directly to the event queue of Active object 1. To do so, the ISR must know the address of (a pointer to) Active object 1. One active object can also post an event directly to another active object.

Please note that all events are delivered asynchronously; no polling code is involved. And each active object processes events in a run-to-completion fashion.

The second method of event posting is the publish-and-subscribe mechanism.

Recall that in direct posting, an ISR that wants to send an event must know the address of the target active object; only then can it post the event into that object’s event queue.

In the publish-and-subscribe method, the ISR need not know the address of any active object. Active objects subscribe to events with the framework. The ISR simply publishes the event to the framework, and the framework forwards it to all the subscribed active objects. The framework provides APIs for both subscribing and publishing.
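The idea behind publish and subscribe can be sketched with a small subscriber table per signal. This is not the QP API: `subscribe`, `publish`, `ActiveObject`, and the limits used here are assumptions made for the example, and delivery is reduced to a counter where a real framework would post the event into each subscriber’s queue.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

enum { TICK_SIG = 0, BUTTON_SIG, MAX_SIG };  /* hypothetical signals */

#define MAX_SUBSCRIBERS 4U

/* Stand-in for an active object: it only counts delivered events. */
typedef struct {
    unsigned received[MAX_SIG];
} ActiveObject;

/* The "framework" side: one subscriber list per signal. */
static ActiveObject *subscribers[MAX_SIG][MAX_SUBSCRIBERS];
static uint8_t       n_subscribers[MAX_SIG];

/* An active object registers its interest in one signal. */
bool subscribe(ActiveObject *ao, uint8_t sig) {
    assert(ao != NULL && sig < MAX_SIG);
    if (n_subscribers[sig] == MAX_SUBSCRIBERS) {
        return false;
    }
    subscribers[sig][n_subscribers[sig]] = ao;
    ++n_subscribers[sig];
    return true;
}

/* Stand-in for posting the event into the subscriber's queue. */
static void deliver(ActiveObject *ao, uint8_t sig) {
    ++ao->received[sig];
}

/* The producer names only the signal, never a target object.
 * Returns the number of subscribers the event was delivered to. */
unsigned publish(uint8_t sig) {
    unsigned delivered = 0U;
    uint8_t i;
    assert(sig < MAX_SIG);
    for (i = 0U; i < n_subscribers[sig]; ++i) {
        deliver(subscribers[sig][i], sig);
        ++delivered;
    }
    return delivered;
}
```

Compared with direct posting, the producer is decoupled from the consumers: adding a new subscriber requires no change in the ISR that publishes the event.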

How to schedule various Active objects?

As mentioned earlier, an active object has its own thread of control, and it relies on the underlying kernel to run in that thread.

Suppose an application has many active objects. How are they scheduled? There are several methods, and the framework provides different kernels.

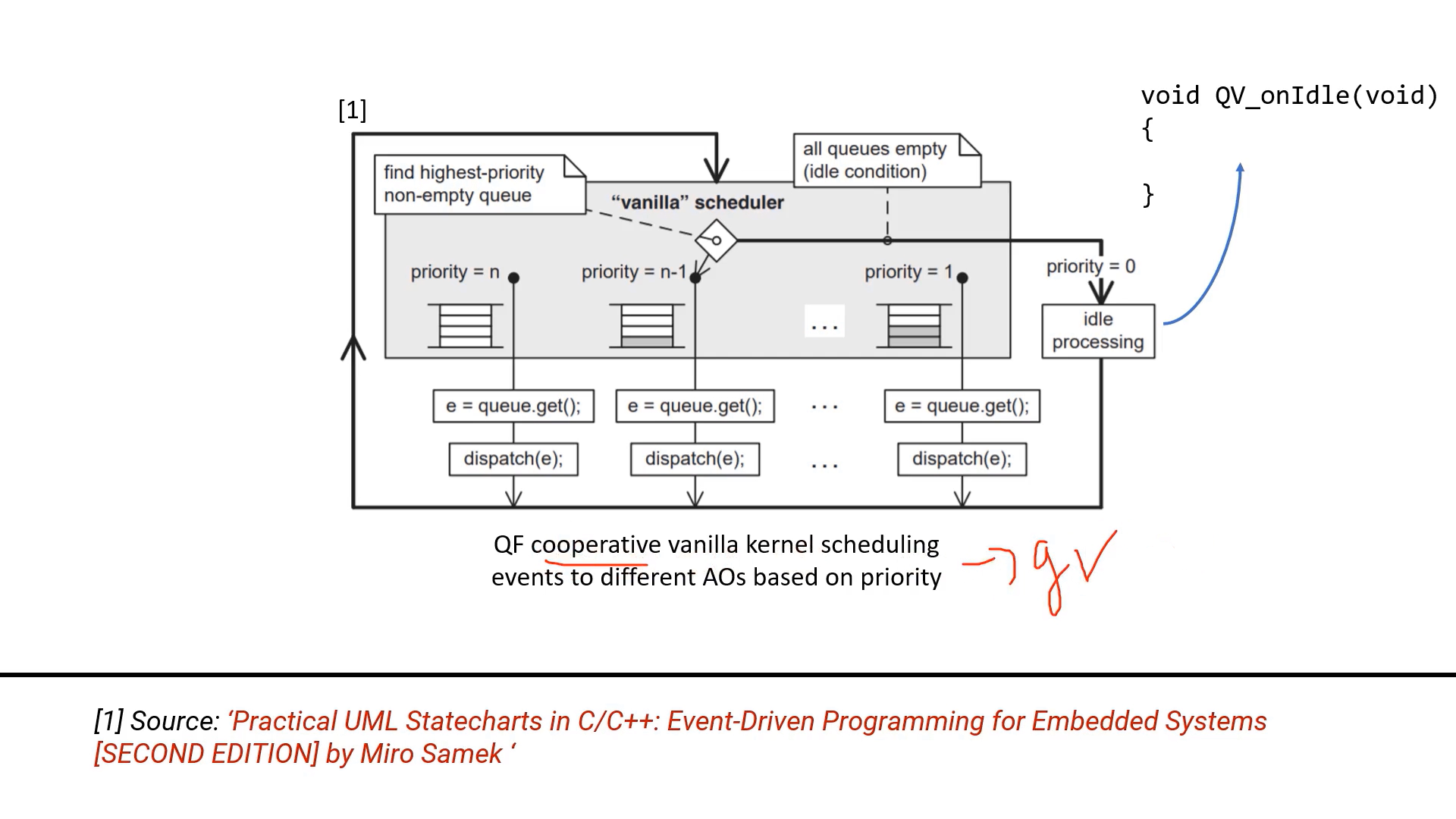

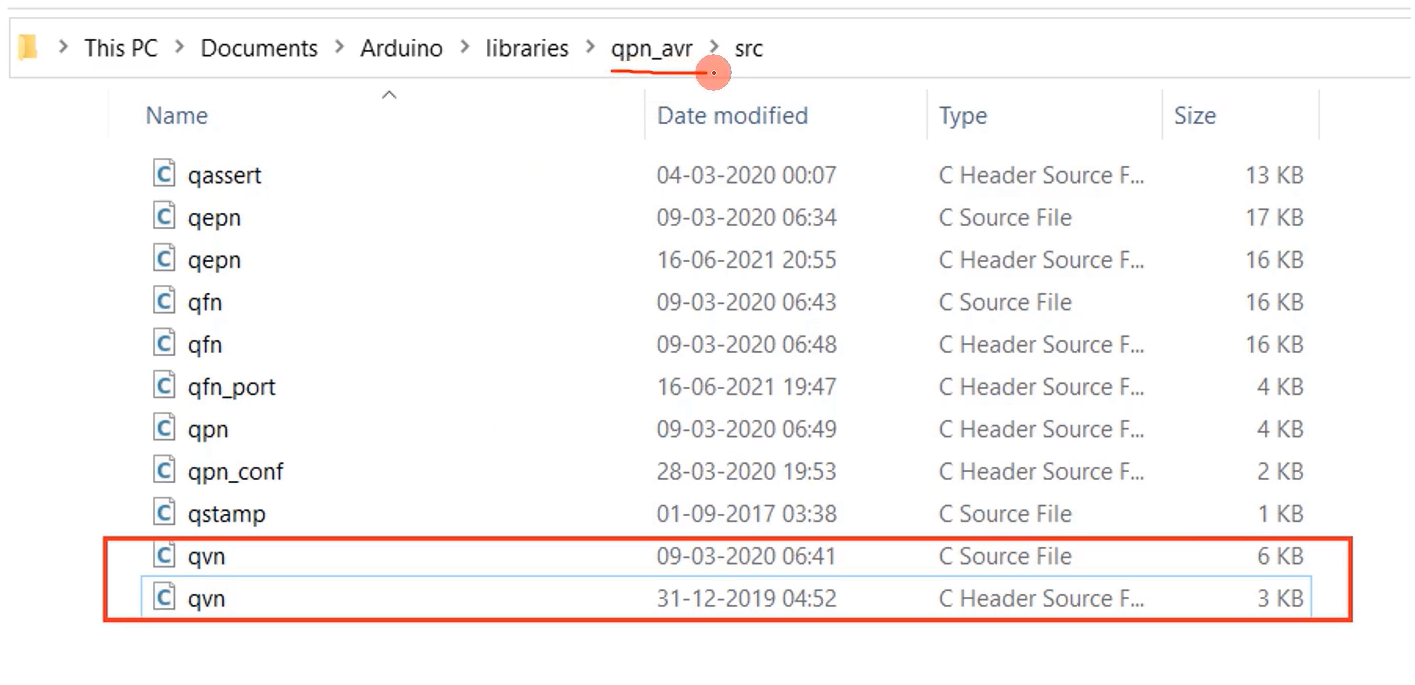

One such kernel is explained in this diagram (Figure 8), which comes from the book ‘Practical UML Statecharts in C/C++: Event-Driven Programming for Embedded Systems’, Second Edition, by Miro Samek. Here, the active objects are processed in a simple cooperative manner using the framework’s cooperative vanilla kernel. This kernel is called the ‘qv’ kernel, and you can find it in the qpn library.

In the ‘qpn’ library, the two files qvn.c and qvn.h contain the implementation of the cooperative vanilla kernel, which is nothing but a super loop, as you can see in Figure 8.

Whenever you launch the cooperative vanilla kernel, it first picks the active object with the highest priority. This means you can assign different priorities to different active objects.

The scheduler first picks the highest-priority active object and then looks into the queue of that active object. If it finds an event, it dispatches that event to the state machine of that active object. We will be using this simple cooperative vanilla kernel for our exercise.
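The scheduling idea can be illustrated with the sketch below. It is not the code from qvn.c: the names (`Active_start`, `Active_post`, `scheduler_step`) are hypothetical, and the sketch assumes that a higher number means a higher priority and that the scheduler runs the highest-priority object whose queue is not empty.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

enum { TICK_SIG = 1 };  /* hypothetical signal */

typedef struct {
    uint8_t sig;
} Event;

#define QUEUE_LEN 4U
#define MAX_PRIO  3U    /* priorities 1..MAX_PRIO, higher runs first */

typedef struct Active Active;
typedef void (*Handler)(Active *me, Event const *e);

struct Active {
    Event    queue[QUEUE_LEN];
    uint8_t  head, tail, used;
    Handler  handler;
    unsigned handled;   /* number of events processed so far */
};

/* One slot per priority level; index 0 is not used. */
static Active *registry[MAX_PRIO + 1U];

void Active_start(Active *me, uint8_t prio, Handler handler) {
    assert(me != NULL && handler != NULL);
    assert(prio >= 1U && prio <= MAX_PRIO && registry[prio] == NULL);
    me->head = 0U;
    me->tail = 0U;
    me->used = 0U;
    me->handler = handler;
    me->handled = 0U;
    registry[prio] = me;
}

bool Active_post(Active *me, uint8_t sig) {
    assert(me != NULL);
    if (me->used == QUEUE_LEN) {
        return false;
    }
    me->queue[me->head].sig = sig;
    me->head = (uint8_t)((me->head + 1U) % QUEUE_LEN);
    ++me->used;
    return true;
}

/* One pass of the cooperative loop: scan from the highest priority
 * down, run ONE RTC step of the first object that has an event, then
 * return. Returns the priority that ran, or 0 when idle. */
int scheduler_step(void) {
    uint8_t p;
    for (p = MAX_PRIO; p > 0U; --p) {
        Active *ao = registry[p];
        if (ao != NULL && ao->used > 0U) {
            Event e = ao->queue[ao->tail];
            ao->tail = (uint8_t)((ao->tail + 1U) % QUEUE_LEN);
            --ao->used;
            ao->handler(ao, &e);
            return (int)p;
        }
    }
    return 0;
}

/* Trivial behavior used for the example: count handled events. */
void count_handler(Active *me, Event const *e) {
    (void)e;
    ++me->handled;
}
```

Because each pass runs exactly one RTC step to completion before rescanning, a lower-priority object only gets the CPU when every higher-priority queue is empty, and no RTC step is ever preempted by another.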

Now let’s redo our previous exercise using the active objects approach.

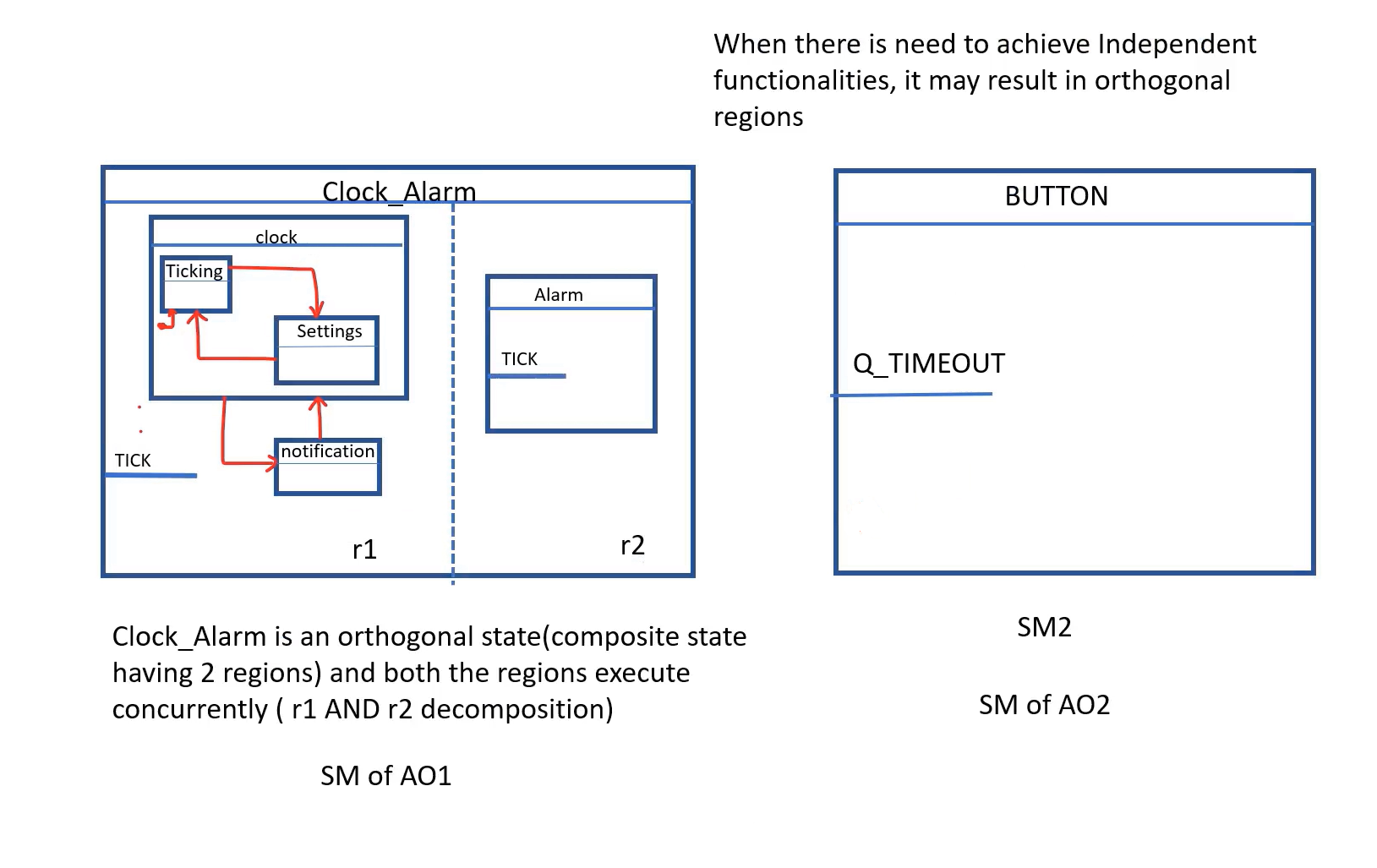

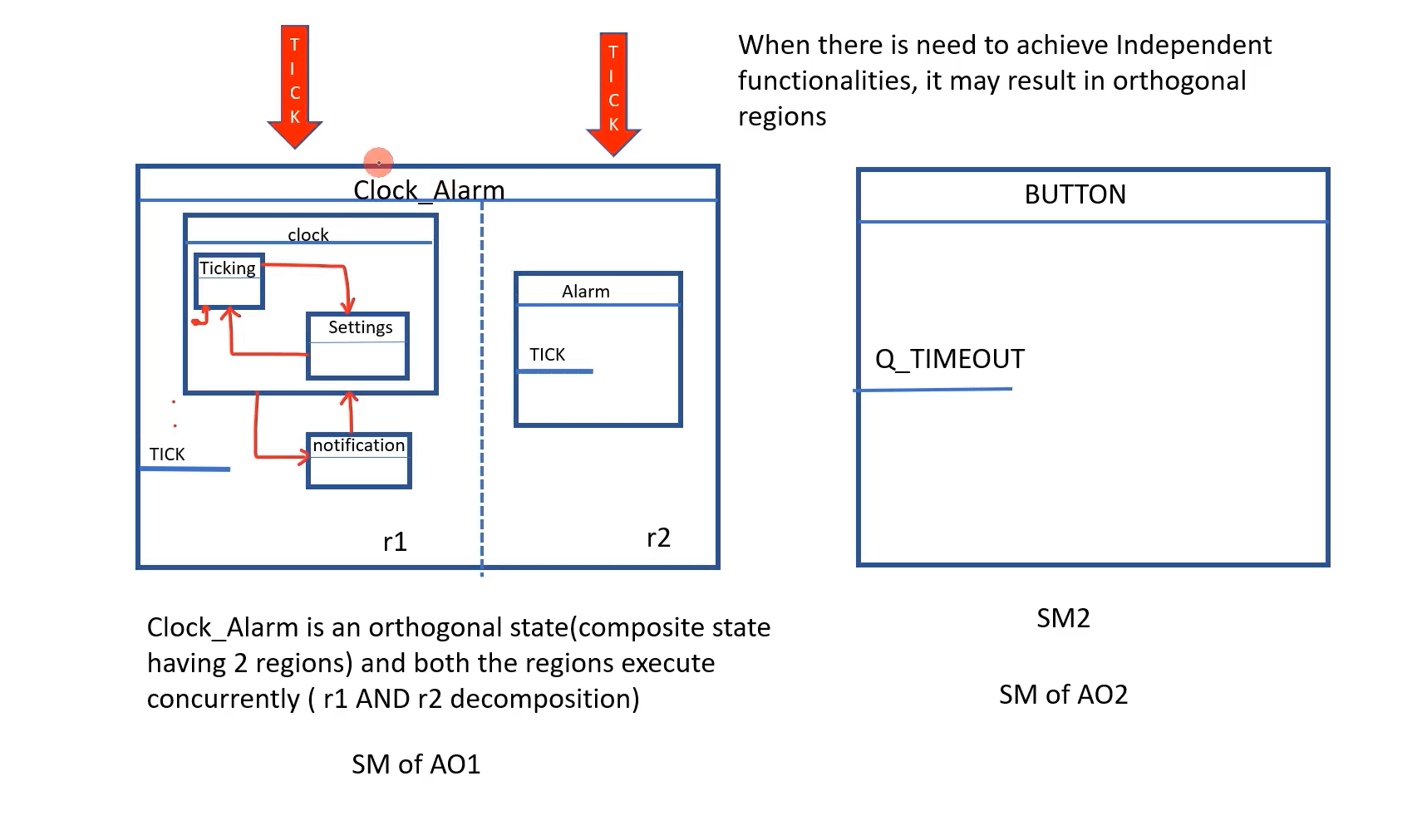

In this exercise, we are going to convert the Clock_Alarm class into an active object. We will also create another class called BUTTON and convert it into an active object. So the application will now have two active objects: Clock_Alarm and BUTTON.

One more change in this exercise is that we will model Clock_Alarm as an orthogonal state. What is an orthogonal state? It is a composite state with two or more regions.

For example, Clock_Alarm is a composite state, and you can also call it an orthogonal state because it has two regions, r1 and r2. Region r1 has a couple of substates, and region r2 also has a couple of substates.

Here, ‘clock’ is a substate of Clock_Alarm, and ‘Alarm’ is also a substate of Clock_Alarm. But these two states are orthogonal; that is, they are concurrent.

For example, a TICK event should be consumed by both substates concurrently. This is what we call AND decomposition. The ‘clock’ state and the ‘notification’ state, on the other hand, form an OR decomposition, which means sequential execution.

The state machine may first enter the ‘clock’ state and then move to the ‘notification’ state. Or it may first enter the ‘notification’ state and, after receiving some event, move to the ‘clock’ state. Only one of the two is active at a time; that’s what we call sequential states, or OR decomposition. The Clock and Alarm states, by contrast, are an AND decomposition: both substates are active at the same time, and both can react whenever an event is received.

A need for independent functionality within one state is what typically leads to an orthogonal region.

In our application, the Alarm component is independent of the other states: the alarm may trigger at any time. It must be a component that is always active, consuming events in parallel with the other states. That’s why we will make Alarm an orthogonal substate of the Clock_Alarm state.

Don’t worry if this is confusing; it should become clear while implementing the exercise. Button is another class: it is another active object with its own event queue and its own state machine. The state machine of Button is very simple, so I’ll explain it later.

The QP framework does not support orthogonal regions directly, and the QM tool doesn’t support drawing orthogonal regions either.

Instead, you can use the orthogonal component state pattern, which emulates orthogonal regions using composition of classes. Composition is a term from object-oriented programming; it is also called strong aggregation.
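As a rough illustration of emulating an orthogonal region through composition, the sketch below embeds an `Alarm` component inside a `ClockAlarm` container, and the container forwards the events the component cares about. The type names, signals, fields, and the plain `switch` handlers are assumptions made for this example; they are not the code generated by QM or the exercise’s final implementation.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

enum { TICK_SIG = 1, ALARM_SET_SIG };  /* hypothetical signals */

typedef struct {
    uint8_t  sig;
    uint32_t param;  /* current time for TICK, alarm time for ALARM_SET */
} Event;

/* The component: a separate class with its own state. */
typedef struct {
    uint32_t alarm_time;
    bool     ringing;
} Alarm;

/* The container holds the component BY VALUE (composition), so the
 * component's lifetime is tied to the container's. */
typedef struct {
    uint32_t curr_time;
    Alarm    alarm;
} ClockAlarm;

void ClockAlarm_init(ClockAlarm *me) {
    assert(me != NULL);
    me->curr_time = 0U;
    me->alarm.alarm_time = 0U;
    me->alarm.ringing = false;
}

void Alarm_dispatch(Alarm *me, Event const *e) {
    assert(me != NULL && e != NULL);
    switch (e->sig) {
    case ALARM_SET_SIG:
        me->alarm_time = e->param;
        me->ringing = false;
        break;
    case TICK_SIG:
        if (e->param == me->alarm_time) {
            me->ringing = true;
        }
        break;
    default:
        break;
    }
}

/* The container handles its own part of the event and then dispatches
 * to the component synchronously, inside the same RTC step. This is
 * how both "regions" get to consume the same TICK. */
void ClockAlarm_dispatch(ClockAlarm *me, Event const *e) {
    assert(me != NULL && e != NULL);
    switch (e->sig) {
    case TICK_SIG: {
        Event tick;
        ++me->curr_time;
        tick.sig = TICK_SIG;
        tick.param = me->curr_time;
        Alarm_dispatch(&me->alarm, &tick);
        break;
    }
    case ALARM_SET_SIG:
        Alarm_dispatch(&me->alarm, e);
        break;
    default:
        break;
    }
}
```

From the outside there is still a single object with a single event queue; the apparent concurrency of the two regions comes from the container passing each relevant event on to its component.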
